Hardware Loop¶

- class HardwareLoop(scenario, manual_triggering=False, plot_information=True, iteration_priority=IterationPriority.DROPS, record_drops=True, **kwargs)[source]¶

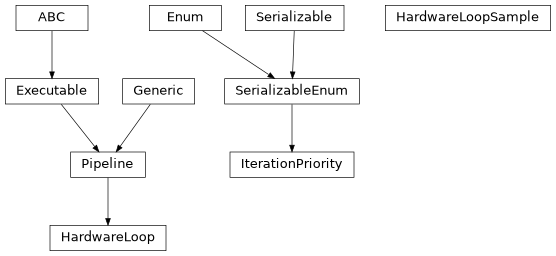

Bases: Generic[PhysicalScenarioType, PDT], Pipeline[PhysicalScenarioType, PDT]

HermesPy hardware loop configuration.

- Parameters:

scenario (TypeVar(PhysicalScenarioType, bound=PhysicalScenario)) – The physical scenario being controlled by the hardware loop.

manual_triggering (bool) – Require a keyboard user input to trigger each drop manually. Disabled by default.

plot_information (bool) – Plot information during loop runtime. Enabled by default.

iteration_priority (IterationPriority) – Which dimension to iterate over first. Defaults to IterationPriority.DROPS.

record_drops (bool) – Record drops during loop runtime. Enabled by default.

- add_dimension(dimension)[source]¶

Add a new dimension to the simulation grid.

- Parameters:

dimension (GridDimension) – Dimension to be added.

- Raises:

ValueError – If the dimension already exists within the grid.

- Return type:

- add_plot(plot)[source]¶

Add a new plot to be visualized by the hardware loop during runtime.

- Parameters:

plot (HardwareLoopPlot) – The plot to be added.

- Return type:

- add_post_drop_hook(hook)[source]¶

Add a post-drop hook.

- Parameters:

hook (Callable[[TypeVar(PhysicalScenarioType, bound=PhysicalScenario), Console], None]) – The hook to be added. The hook must accept the scenario and console as arguments.

- Raises:

ValueError – If the hook is already registered.

- Return type:

- add_pre_drop_hook(hook)[source]¶

Add a pre-drop hook.

- Parameters:

hook (Callable[[TypeVar(PhysicalScenarioType, bound=PhysicalScenario), Console], None]) – The hook to be added. The hook must accept the scenario and console as arguments.

- Raises:

ValueError – If the hook is already registered.

- Return type:

- evaluator_index(evaluator)[source]¶

Index of the given evaluator.

Returns: The index of the evaluator.

- new_dimension(dimension, sample_points, *args, **kwargs)[source]¶

Add a dimension to the sweep grid.

The dimension must be a property of the managed scenario.

- Parameters:

dimension (str) – String representation of the dimension location relative to the investigated object.

sample_points (List[Any]) – List of points at which the dimension will be sampled into a grid. The type of the points must be identical to the grid arguments / type.

*args (Tuple[Any]) – References to the object the dimension belongs to. Resolved to the investigated object by default, but may be an attribute or sub-attribute of the investigated object.

**kwargs – Additional keyword arguments to be passed to the dimension. See GridDimension for more information.

- Return type:

Returns: The newly created dimension object.
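The idea of addressing a dimension by a string location relative to an investigated object can be illustrated with a small standalone sketch. It does not use HermesPy and is not its implementation: `resolve_owner`, `sweep`, and the `device.carrier_frequency` attribute are hypothetical names chosen for illustration, and the dotted-path syntax is an assumption about what such a string might look like.

```python
from functools import reduce
from types import SimpleNamespace
from typing import Any, List, Tuple


def resolve_owner(root: Any, path: str) -> Tuple[Any, str]:
    """Split a dotted path into the object owning the attribute and the attribute name."""
    *parents, leaf = path.split(".")
    # Walk the parent attributes starting from the root object.
    owner = reduce(getattr, parents, root)
    return owner, leaf


def sweep(root: Any, path: str, sample_points: List[Any]) -> List[Any]:
    """Set the attribute at `path` to each sample point in turn and record the value read back."""
    owner, leaf = resolve_owner(root, path)
    if not hasattr(owner, leaf):
        raise ValueError(f"'{path}' is not an attribute of the investigated object")
    observed = []
    for point in sample_points:
        setattr(owner, leaf, point)
        observed.append(getattr(owner, leaf))
    return observed


# Hypothetical stand-in for a scenario with a nested device attribute.
scenario = SimpleNamespace(device=SimpleNamespace(carrier_frequency=1e9))
observed = sweep(scenario, "device.carrier_frequency", [1e9, 2e9, 3e9])
```

In this toy model, each sample point is written to the resolved attribute before the next measurement would be taken, which is the role a grid dimension plays in a parameter sweep.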

- run(overwrite=True, campaign=None, serialize_state=True)[source]¶

Run the hardware loop configuration.

- Parameters:

overwrite – Allow the replacement of an already existing save file.

campaign – Name of the measurement campaign.

serialize_state (bool) – Serialize the state of the scenario to the results file. Enabled by default.

- Return type:

Returns: The result of the hardware loop.

- property iteration_priority: IterationPriority¶

Iteration priority of the hardware loop.

- manual_triggering: bool¶

Require a user input to trigger each drop manually.

- plot_information: bool¶

Plot information during loop runtime.

- property post_drop_hooks: list[Callable[[PhysicalScenarioType, Console], None]]¶

List of post-drop hooks.

Post-drop hooks are called after each drop is generated and can be used to add additional actions not natively supported by the hardware loop.

- property pre_drop_hooks: list[Callable[[PhysicalScenarioType, Console], None]]¶

List of pre-drop hooks.

Pre-drop hooks are called before each drop is generated and can be used to add additional actions not natively supported by the hardware loop.
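The hook mechanism described for pre-drop and post-drop hooks can be sketched with a toy registry. This is a standalone illustration, not HermesPy's implementation: `HookRegistry`, `run_drop`, and the two example hooks are hypothetical, and only the documented behaviour is mirrored (hooks receive the scenario and console, and registering the same hook twice raises a ValueError).

```python
from typing import Any, Callable, List

# A hook accepts the scenario and the console and returns nothing.
Hook = Callable[[Any, Any], None]


class HookRegistry:
    """Toy stand-in for the hook handling of a hardware loop."""

    def __init__(self) -> None:
        self.pre_drop_hooks: List[Hook] = []
        self.post_drop_hooks: List[Hook] = []

    def add_pre_drop_hook(self, hook: Hook) -> None:
        if hook in self.pre_drop_hooks:
            raise ValueError("Hook already registered")
        self.pre_drop_hooks.append(hook)

    def add_post_drop_hook(self, hook: Hook) -> None:
        if hook in self.post_drop_hooks:
            raise ValueError("Hook already registered")
        self.post_drop_hooks.append(hook)

    def run_drop(self, scenario: Any, console: Any, generate_drop: Callable[[], Any]) -> Any:
        """Call pre-drop hooks, generate one drop, then call post-drop hooks."""
        for hook in self.pre_drop_hooks:
            hook(scenario, console)
        drop = generate_drop()
        for hook in self.post_drop_hooks:
            hook(scenario, console)
        return drop


events: List[str] = []


def before_drop(scenario: Any, console: Any) -> None:
    events.append("pre")


def after_drop(scenario: Any, console: Any) -> None:
    events.append("post")


registry = HookRegistry()
registry.add_pre_drop_hook(before_drop)
registry.add_post_drop_hook(after_drop)
registry.run_drop(scenario=None, console=None, generate_drop=lambda: events.append("drop"))
```

A hook of this shape is a natural place for actions such as repositioning equipment or logging a status line between drops.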

- record_drops: bool¶

Record drops during loop runtime.

- class HardwareLoopSample(drop, evaluations, artifacts)[source]¶

Bases: object

Sample of the hardware loop.

Generated during HardwareLoop.run().

- property evaluations: Sequence[Evaluation]¶

Evaluations of the hardware loop sample.

- class IterationPriority(value, names=None, *, module=None, qualname=None, type=None, start=1, boundary=None)[source]¶

Bases: SerializableEnum

Iteration priority of the hardware loop.

Used by the HardwareLoop.run() method.

- DROPS = 0¶

Iterate over drops first before iterating over the parameter grid.

- GRID = 1¶

Iterate over the parameter grid first before iterating over drops.
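The difference between the two priorities can be sketched as two loop orderings over the same set of (grid point, drop) pairs. This standalone example does not use HermesPy; `Priority` and `iteration_order` are hypothetical names. It assumes that "first" refers to the fastest-varying index, so that DROPS collects all drops at one grid point before moving on, which is one plausible reading of the descriptions above rather than a confirmed detail of the library.

```python
from enum import Enum
from itertools import product
from typing import Any, List, Sequence, Tuple


class Priority(Enum):
    """Hypothetical mirror of the two documented priorities."""

    DROPS = 0
    GRID = 1


def iteration_order(
    grid: Sequence[Sequence[Any]], num_drops: int, priority: Priority
) -> List[Tuple[Tuple[Any, ...], int]]:
    """Return the visiting order as a list of (grid point, drop index) pairs."""
    points = list(product(*grid))
    if priority is Priority.DROPS:
        # Assumed reading: the drop index varies fastest.
        return [(point, drop) for point in points for drop in range(num_drops)]
    # Assumed reading: the grid point varies fastest.
    return [(point, drop) for drop in range(num_drops) for point in points]


drops_first = iteration_order([[1, 2]], 2, Priority.DROPS)
grid_first = iteration_order([[1, 2]], 2, Priority.GRID)
```

Both orderings visit the same pairs. Under the stated assumption, the choice only affects how often the hardware has to be reconfigured between consecutive drops.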